Research Themes

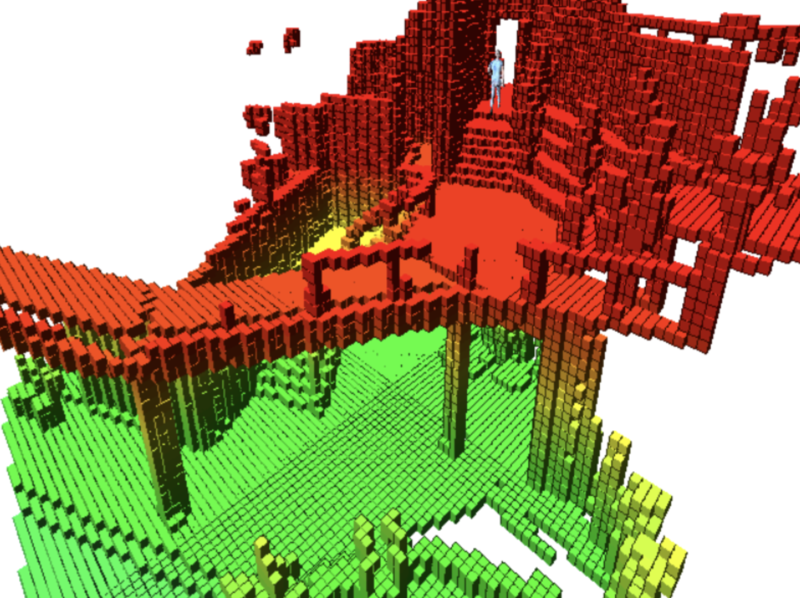

Human-Robot Interaction and Scene Understanding are two linked research themes that fall under the broader heading of Workplace Autonomy. In the Scene Understanding theme, we focus on understanding challenging environments using methods such as real-time depth estimation, localization, and mapping. In the Human-Robot Interaction theme, we aim to develop a broader view of how humans and robots can work together safely and efficiently across a wider range of workplace contexts.

There are clear synergies between the two projects and, as would be expected, the research teams involved collaborate closely. For example, many of the required capabilities are shared, such as (i) real-time depth estimation, localization, and mapping, (ii) point cloud motion estimation, (iii) detection, tracking, and monitoring of people/assets, (iv) wireless communication and distributed computation, and (v) safe control of and interaction with any robots in the space.

Human-Robot Interaction

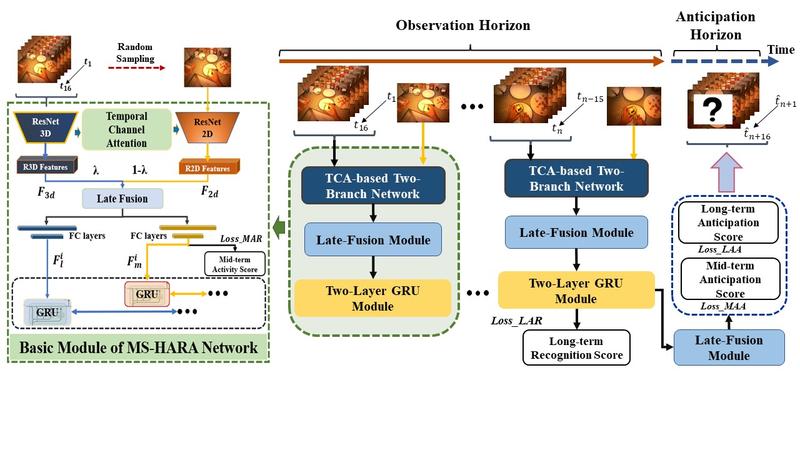

Although significant advances have been made in Human Activity Recognition (HAR) recently, modelling human activities across multiple time scales remains an open problem. Multi-scale human behaviour is ubiquitous in daily life. For example, humans commonly need to perform a series of sub-activities in order to achieve a final objective. The combination of these sub-activities forms a specific long-term activity.

For more details please see research by Yang Xing.

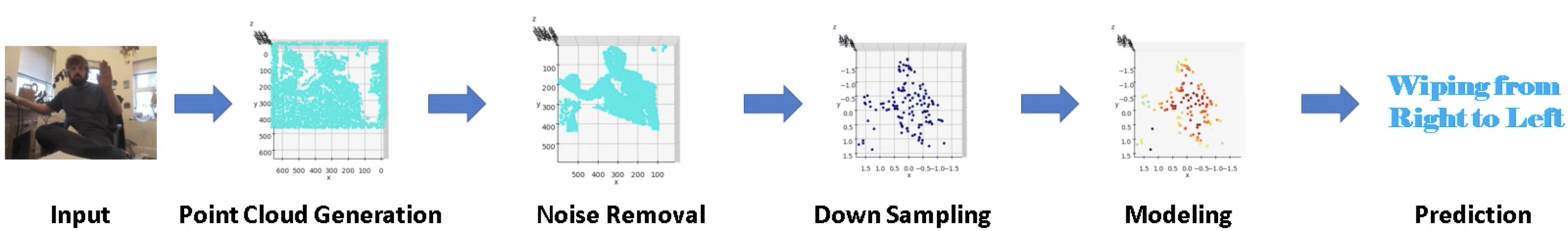

Scene flow is a powerful tool for capturing the motion field of 3D point clouds. However, it is difficult to directly apply flow-based models to dynamic point cloud classification since the unstructured points make it hard or even impossible to efficiently and effectively trace point-wise correspondences. To capture 3D motions without explicitly tracking correspondences, we propose a kinematics-inspired neural network (Kinet) by generalizing the kinematic concept of ST-surfaces to the feature space. By unrolling the feature-level normal solver of ST-surfaces, Kinet implicitly encodes scene flow dynamics and gains advantages from the use of mature backbones for static point cloud processing. With only minor changes in network structures and low computing overhead, it is painless to jointly train and deploy our framework with a given static model.

For more details please see research by Jiaxing Zhong.

Despite numerous recent works on both marker-based and markerless multi-UAV single-person motion capture, markerless single-camera multi-person 3D human pose estimation is currently a much earlier-stage technology, and we are not aware of any existing attempts to deploy it in an aerial context. The system we have built for this project is thus, to our knowledge, the first to perform joint mapping and multi-person 3D human pose estimation from a monocular camera mounted on a single UAV.

For more details please see research by Stuart Golodetz.

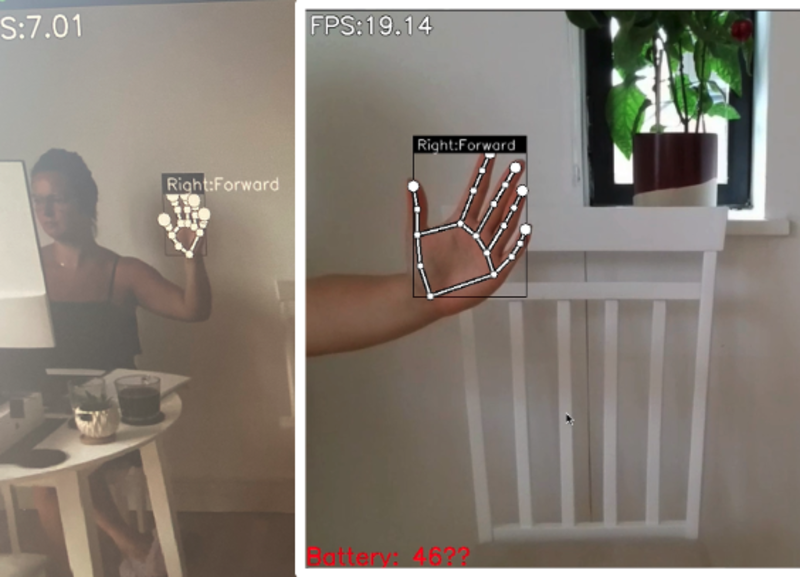

Beyond traditional single-modality controller interfaces (e.g. a joystick or on-screen buttons), there has been little progress in interaction design to enable intuitive human-machine interaction and collaboration with such robotic systems. Our work explores the potential of multi-modal interaction to improve the usability of human-robot interaction, specifically with drones. We present a prototype system consisting of a head-mounted hand tracker and voice control on a laptop, which together support gesture and voice recognition for controlling a drone.

For more details please see research by Aluna Everitt.

Scene Understanding

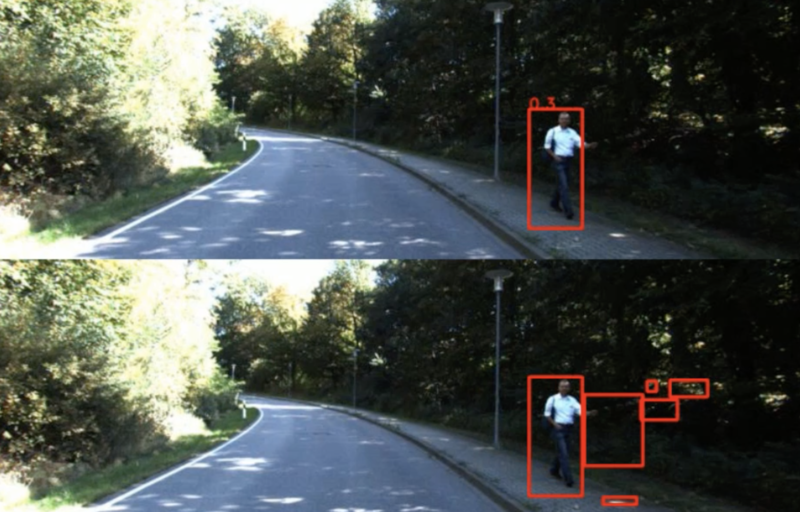

A challenging problem in visual scene understanding is to detect and track moving objects, which becomes significantly harder when the camera itself is moving. In our project, we tackle this problem by adopting self-supervised learning. Our detection model uses a multiview reconstruction error as a supervision signal to learn confidence scores and bounding boxes for moving objects.

For more details please see research by Sangyun Shin.

Scene flow is a powerful tool for capturing the motion field of 3D point clouds. However, it is difficult to directly apply flow-based models to dynamic point cloud classification since the unstructured points make it hard or even impossible to efficiently and effectively trace point-wise correspondences. To capture 3D motions without explicitly tracking correspondences, we propose a kinematics-inspired neural network (Kinet) by generalizing the kinematic concept of ST-surfaces to the feature space.

For more details please see research by Jiaxing Zhong.

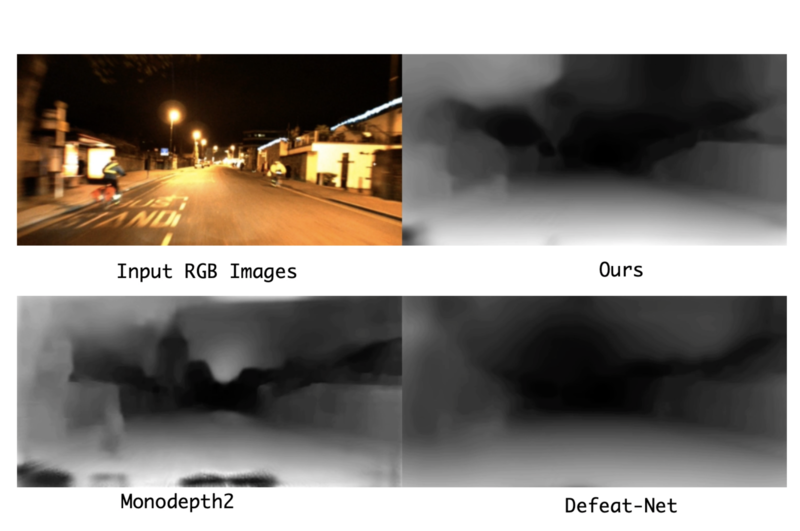

Self-supervised deep learning methods for depth and egomotion estimation can yield accurate trajectories without the need for ground-truth training data. However, as these approaches are based on photometric losses, their performance degrades significantly when used on sequences captured at night. This is because the fundamental assumptions of illumination consistency are not satisfied by point illumination sources such as car headlights and street lights. We propose a simple, yet effective, per-pixel neural intensity transformation to compensate for these illumination artefacts that occur between subsequent frames.

In addition, we extend the rigid-motion transformation generated by the estimated depth and ego-motion to address issues of dynamic objects, noise, and blur using a sparse-reconstruction residual module. Lastly, a novel delta-loss is proposed to mitigate occlusions and reflections by making the estimated photometric losses bidirectionally consistent. Extensive experimental and ablation studies are performed on the challenging Oxford RobotCar dataset to demonstrate the efficacy of the proposed method, both in day-time and night-time sequences. The resulting framework is shown to outperform the current state of the art by 15% in the RMSE metric over day and night time sequences.
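To make the idea of compensating a photometric loss for illumination changes concrete, here is a minimal sketch. It is not the project's implementation: it assumes the per-pixel intensity transformation is affine (a scale and an offset per pixel), and the function names, the flattened 4-pixel images, and the hand-picked "network outputs" are all hypothetical.

```python
from typing import List, Sequence


def photometric_l1(target: Sequence[float], warped: Sequence[float]) -> float:
    """Mean absolute intensity difference between two flattened images."""
    if len(target) != len(warped):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(t - w) for t, w in zip(target, warped)) / len(target)


def apply_intensity_transform(
    image: Sequence[float], scale: Sequence[float], offset: Sequence[float]
) -> List[float]:
    """Assumed affine form: out[i] = scale[i] * image[i] + offset[i]."""
    return [s * p + o for p, s, o in zip(image, scale, offset)]


# Toy example: the last pixel is lit by a headlight in the source frame only,
# so illumination consistency is violated at that pixel.
target = [0.20, 0.40, 0.60, 0.30]
warped_source = [0.20, 0.40, 0.60, 0.90]

# Hypothetical per-pixel outputs: identity everywhere except the glare pixel.
scale = [1.0, 1.0, 1.0, 1.0]
offset = [0.0, 0.0, 0.0, -0.6]

loss_plain = photometric_l1(target, warped_source)
loss_compensated = photometric_l1(
    target, apply_intensity_transform(warped_source, scale, offset)
)
```

In this toy case the uncompensated loss is dominated by the single glare pixel, while the compensated loss is close to zero. In a learned setting the scale and offset would be predicted rather than hand-set, and would presumably need to be constrained so that they cannot trivially explain away every residual.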

For more details please see research by Madhu Vankadari.

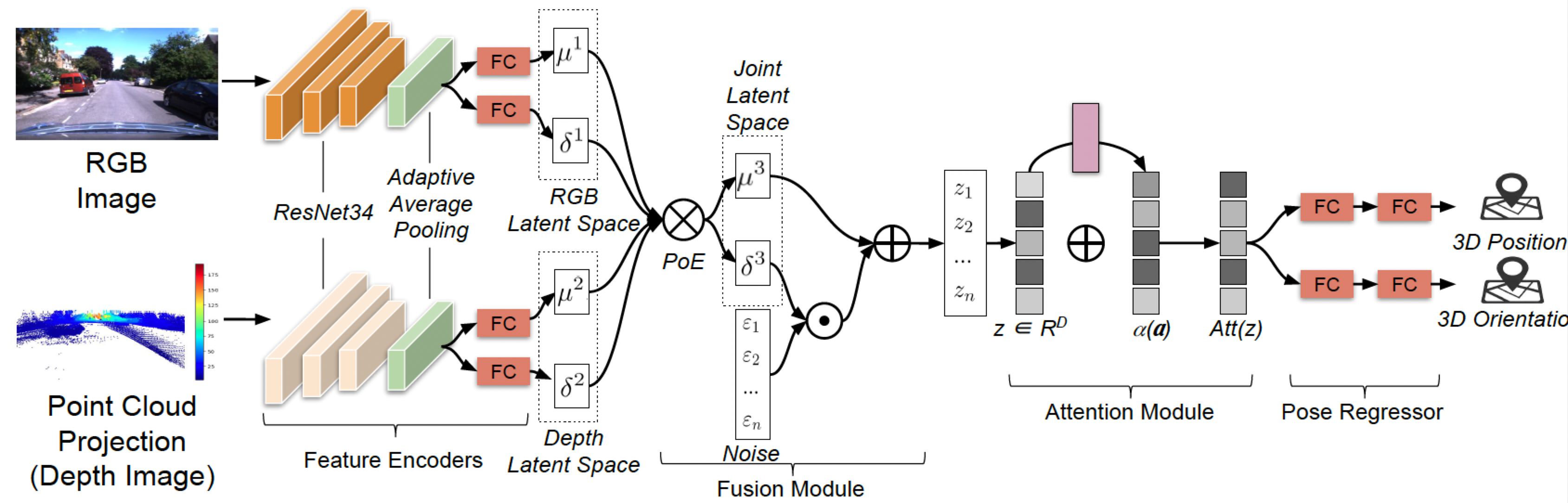

Recent learning-based approaches have achieved impressive results in the field of single-shot camera localization. However, how best to fuse multiple modalities and how to deal with degraded or missing input are less well studied. In this paper, we propose an end-to-end framework, termed VMLoc, to fuse different sensor inputs into a common latent space through a variational Product-of-Experts (PoE) followed by attention-based fusion. Unlike previous multimodal variational works that directly adapt the objective function of the vanilla variational auto-encoder, we show how camera localization can be accurately estimated through an unbiased objective function based on importance weighting.
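As an illustration of the Product-of-Experts step, the sketch below fuses diagonal Gaussian experts in closed form: precisions add, and the fused mean is the precision-weighted average of the expert means. This is the standard Gaussian PoE identity rather than VMLoc's actual code; the modality names, the two-dimensional latent, and the numbers are hypothetical, and the attention-based fusion and importance-weighted objective are not shown.

```python
from typing import List, Sequence, Tuple

# One expert = (means, variances), one entry per latent dimension.
Gaussian = Tuple[Sequence[float], Sequence[float]]


def product_of_gaussian_experts(
    experts: Sequence[Gaussian],
) -> Tuple[List[float], List[float]]:
    """Fuse diagonal Gaussian experts into a single diagonal Gaussian."""
    if not experts:
        raise ValueError("at least one expert is required")
    dims = len(experts[0][0])
    means: List[float] = []
    variances: List[float] = []
    for d in range(dims):
        # Precisions (inverse variances) of the experts add up.
        precision = sum(1.0 / var[d] for _, var in experts)
        # Each expert's mean is weighted by its precision.
        weighted = sum(mu[d] / var[d] for mu, var in experts)
        variances.append(1.0 / precision)
        means.append(weighted / precision)
    return means, variances


# Hypothetical latent estimates from two sensor encoders.
image_expert = ([1.0, 0.0], [1.0, 4.0])
depth_expert = ([3.0, 2.0], [1.0, 4.0])

fused_mean, fused_var = product_of_gaussian_experts([image_expert, depth_expert])

# A missing modality is handled by leaving its expert out of the product.
image_only_mean, image_only_var = product_of_gaussian_experts([image_expert])
```

With two equally confident experts, the fused mean lies midway between them and the fused variance is halved. With one expert removed, the result falls back to the remaining expert, which is one reason a product formulation is convenient when inputs may be degraded or absent.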

For more details please see research by Kaichen Zhou.

Our research is supported by Amazon Web Services (AWS).